‘Signal-to-noise ratio’ (SNR) is a long-established metric in science and engineering that compares the level of a desired signal to the level of background noise. May 2021 saw the release of the much-anticipated book ‘Noise’ by Nobel Memorial Prize winner and ‘Thinking, Fast and Slow’ author Daniel Kahneman, co-authored with Olivier Sibony and Cass R. Sunstein. Adopting the SNR terminology, the book focuses on the concept of ‘noise’ as interference in human judgement. It concludes with several recommendations on how to improve decision-making. The authors describe applying these lessons as ‘decision hygiene’.

Noise vs Bias

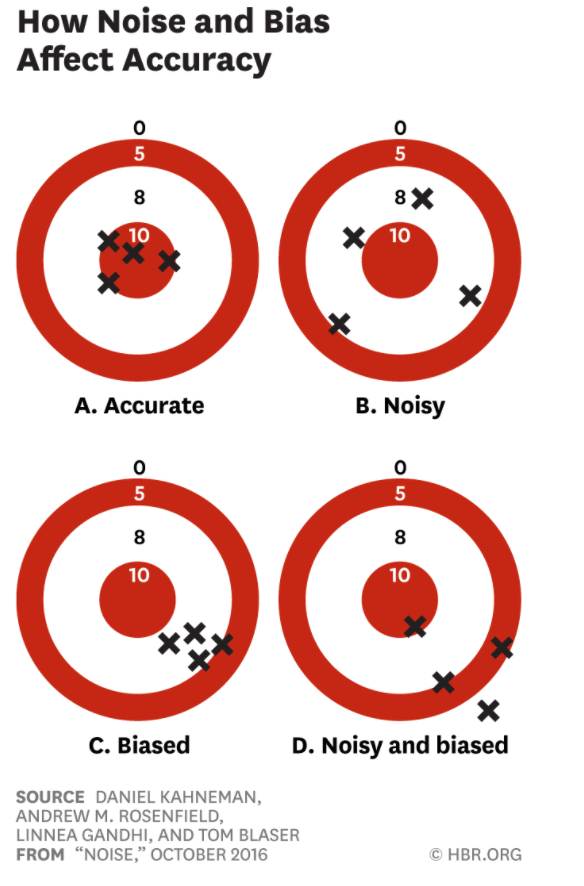

The book makes an important distinction between bias and noise, two components of error that interfere with human judgement. The different ways in which these errors can manifest are nicely illustrated in Figure 1, which uses the familiar ‘bull’s eye’ target. ‘Bias’ is reflected in systematic deviation in the accuracy of judgements (C – grouped, but off target), whilst ‘Noise’ reflects random scatter (B – ungrouped and off target).

Figure 1. Noise and Bias (Kahneman et al., October 2016)
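The distinction can be made concrete with a little arithmetic. The sketch below is illustrative only: the error values are invented, and it assumes the common convention of measuring bias as the mean error and noise as the standard deviation of the errors. Under that convention, mean squared error equals bias squared plus noise squared, which is the ‘error equation’ discussed by Kahneman et al. (2021).

```python
from statistics import mean, pstdev

# Hypothetical judgement errors (judgement minus true value) for three
# groups of assessors. The labels loosely mirror the targets in Figure 1;
# the numbers themselves are invented for illustration.
TEAMS = {
    "A": [-1, 1, 0, 1, -1],   # on target, tightly grouped
    "B": [-6, 5, 0, 7, -6],   # scattered: mostly noise
    "C": [4, 6, 5, 6, 4],     # tightly grouped but off target: mostly bias
}


def bias_and_noise(errors):
    """Return (bias, noise): the mean error and the spread of the errors."""
    return mean(errors), pstdev(errors)


def mean_squared_error(errors):
    """Average of the squared errors, a standard measure of overall error."""
    return mean(e * e for e in errors)


for label, errors in TEAMS.items():
    bias, noise = bias_and_noise(errors)
    mse = mean_squared_error(errors)
    # The two printed totals should match: MSE = bias^2 + noise^2.
    print(f"Team {label}: bias={bias:.2f} noise={noise:.2f} "
          f"MSE={mse:.2f} bias^2+noise^2={bias**2 + noise**2:.2f}")
```

Because the two components are squared before being added, a unit of noise is just as costly as a unit of bias, which is why the authors argue that noise deserves equal attention.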

The topic of ‘bias’ in decision making has received a lot of attention in recent years. Bias can manifest in various forms, including confirmation bias, affinity bias and loss aversion bias. In contrast to noise, decisions heavily influenced by bias can appear highly consistent, and invite causal explanations as a result. The goal of Kahneman et al. (2021) is to draw attention to the distinct and equally potent issues arising from noise; the implications of failing to do this are immense.

Noise, argue the authors, is everywhere. It plagues professions including criminal justice, insurance underwriting, forensic science, futurism, medicine, and human resources. Kahneman et al. (2021) document in detail the large and growing body of literature demonstrating how people working side-by-side in the same job can make widely varied judgements about similar cases that often prove costly, life changing, and in some industries, fatal.

Minimising ‘noise’ has been a key objective in the application of psychometrics. The challenges in evaluating individual differences in abilities, competencies and personalities and their significance for personal, team and organisational success are considerable. Subjectivity, inconsistency, and bias are major contributors to the noise in personnel decision making. Psychometric assessment’s aim for consistency in process, methodology and measurement criteria parallels the aim of ‘noise’ reduction. In the context of psychometrics, the parallel term is ‘reliability’, defined by Arnold et al. (2020) as: “An indicator of the consistency which a test or procedure provides. It is possible to quantify reliability to indicate the extent to which a measure is free from error”.

Noise and bias are inevitable, but efforts should still be made to reduce these distortions by maximising reliability and validity. There are several sub-categories of reliability that psychometrics must consider, including test-retest, internal consistency, and split-half reliability. The ‘noisier’ a test is, the greater the amount of unwanted variance its results will contain. In test-retest terms, for example, the aim is to minimise differences in results between two administrations of the test to the same participant. A more thorough breakdown of these reliability sub-categories can be found in a separate PCL blog piece.
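Test-retest reliability is conventionally estimated as the correlation between scores from two administrations of the same test. As a minimal sketch, assuming invented scores for six participants, the example below compares a retest that closely tracks the first sitting with one that does not. The data and variable names are hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


# Invented scores for the same six participants on two occasions.
FIRST_SITTING = [12, 15, 9, 20, 17, 11]
CONSISTENT_RETEST = [13, 14, 10, 19, 18, 11]   # small differences only
NOISY_RETEST = [18, 9, 15, 12, 20, 13]         # large, unsystematic differences

print("Consistent test:", round(pearson_r(FIRST_SITTING, CONSISTENT_RETEST), 2))
print("Noisy test:     ", round(pearson_r(FIRST_SITTING, NOISY_RETEST), 2))
```

The closer the coefficient is to 1, the less of the variation in results can be attributed to noise between administrations.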

Noisy Selection

Of the areas that Kahneman et al. (2021) examine, selection is the most relevant to our psychometric assessment industry. Unsurprisingly, unstructured interviews come in for heavy criticism. Using competency-based interviews with multiple assessors can significantly boost quality, but a degree of noise and bias will always be present. A meta-analysis of employment interview reliability found an average inter-assessor correlation of .74 (Huffcutt et al., 2013), suggesting that even when two interviewers observe the same two candidates, they will still disagree about who was stronger about one-quarter of the time.
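The step from a correlation to a rate of disagreement can be sketched with a standard result: if two sets of ratings are assumed to follow a bivariate normal distribution with correlation r, the probability that both rank a randomly chosen pair of candidates in the same order is 0.5 + arcsin(r) / π. The function name below is our own, and the normality assumption is a simplification.

```python
from math import asin, pi


def percent_concordant(r):
    """Probability that two measures rank a random pair the same way,
    assuming the measures are bivariate normal with correlation r."""
    return 0.5 + asin(r) / pi


inter_assessor_r = 0.74  # average reported by Huffcutt et al. (2013)
agreement = percent_concordant(inter_assessor_r)

print(f"Agree on the stronger candidate:    {agreement:.1%}")
print(f"Disagree on the stronger candidate: {1 - agreement:.1%}")
```

A correlation of zero gives 50% agreement (a coin toss) and a perfect correlation gives 100%, so the conversion offers an intuitive way to read any validity or reliability coefficient.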

One specific example involved a candidate who was asked in two separate interviews, by two different assessors, why he had left his short-lived former CFO role. His response was that he had a “strategic disagreement with the CEO”. When the two assessors met to compare notes, the first had perceived the candidate’s decision as a sign of integrity and courage, yet the second had construed the response as a sign of inflexibility and potential immaturity. Even when stimuli are consistent, prior attitudes influence our interpretation of facts.

Kahneman et al. (2021) also discuss how prior attitudes can influence whether salient facts emerge to begin with. Research has indicated that first impressions have a striking impact on interviewers’ perceptions of candidates and may dictate whether certain evidence is sought. Even if competencies around team working are identified as predictors of role performance, interviewers may be less inclined to ask tougher questions in pursuit of this information if they perceive the candidate to be cheerful and gregarious in the opening informal exchanges.

These examples illustrate that, whilst structured competency-based interviews improve the accuracy of selection decisions, it is advisable to apply ‘decision hygiene’ by supplementing them with additional methods. Psychometrics are used to improve selection processes, not least because they can reduce noise. If two or more candidates submit the same responses to a set of psychometric items, they are guaranteed to receive identical outputs regardless of the gender, age, or ethnicity of the candidate or the assessor.

Algorithmic Aggregation

So far, we have established that selection judgements will be more accurate when several sources of relevant information are considered and aggregated, preferably by multiple assessors. But when considering multiple metrics, how they are aggregated is key.

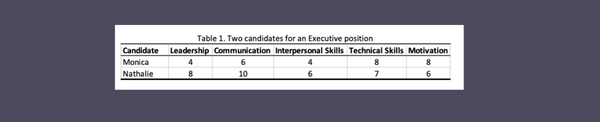

Kahneman et al. (2021) differentiate between ‘clinical’ and ‘mechanical’ aggregation and demonstrate this distinction by presenting the reader with the ratings received by two subsequently hired candidates (see Table 1). The reader is then asked to predict which candidate would prove the stronger performer two years later.

The temptation is to eyeball these various evaluations and provide a judgement that seems right. This informal approach of combining a quick computation with internal intuitive consultation is an example of ‘clinical’ aggregation. Practitioners adopting this approach are likely to conclude that ‘Nathalie’ outperformed ‘Monica’, yet ‘mechanical’ aggregation indicated that ‘Technical Skills’ and ‘Motivation’ had far greater power in predicting performance. A prediction derived from ‘mechanical’ aggregation would correctly conclude that ‘Monica’ would be the stronger performer in the Executive role.

Interestingly, this example is loosely based on a study by Yu and Kuncel (2020). Doctoral-level psychologists were given real data from hired candidates and asked to predict subsequent performance ratings. These predictions correlated .15 with performance ratings, which meant that participants were able to pick the higher performer in an employee pairing only 55% of the time: not much better than a 50/50 chance!

Whilst blame could be levelled at the limited validity of competency scores, it is possible to significantly increase the predictive power of this data using the statistical method of ‘multiple regression’. This method finds the set of weights that maximises the correlation between a weighted combination of the predictors and the target performance variable. The better the predictor, the larger its weight, and useless predictors get a weight of zero. This is an example of ‘mechanical’ aggregation.
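To show how such weights are derived, here is a minimal sketch of ordinary least squares regression written in plain Python. Everything in it is an assumption made for illustration: the ratings are invented, the third predictor (`other_rating`) is hypothetical, and the performance scores are constructed without any random error so that the recovered weights are easy to check. Real data would be far messier.

```python
def solve_linear_system(matrix, vector):
    """Solve matrix @ x = vector by Gaussian elimination with partial pivoting."""
    n = len(vector)
    rows = [matrix[i][:] + [vector[i]] for i in range(n)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(rows[r][col]))
        rows[col], rows[pivot] = rows[pivot], rows[col]
        for r in range(col + 1, n):
            factor = rows[r][col] / rows[col][col]
            for c in range(col, n + 1):
                rows[r][c] -= factor * rows[col][c]
    solution = [0.0] * n
    for i in range(n - 1, -1, -1):  # back-substitution
        known = sum(rows[i][j] * solution[j] for j in range(i + 1, n))
        solution[i] = (rows[i][n] - known) / rows[i][i]
    return solution


def fit_weights(predictors, targets):
    """Least-squares fit. Returns [intercept, weight_1, weight_2, ...]."""
    design = [[1.0] + list(row) for row in predictors]
    k = len(design[0])
    # Normal equations: (X^T X) w = X^T y
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * t for d, t in zip(design, targets)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def predict(weights, row):
    """Weighted combination of one candidate's ratings."""
    return weights[0] + sum(w * x for w, x in zip(weights[1:], row))


# Invented ratings: (technical_skills, motivation, other_rating)
RATINGS = [(4, 3, 8), (6, 5, 2), (7, 4, 6), (5, 8, 3),
           (8, 6, 7), (3, 7, 5), (9, 5, 4), (6, 9, 9)]
# Invented performance that depends only on the first two ratings.
PERFORMANCE = [0.6 * tech + 0.4 * motiv for tech, motiv, _ in RATINGS]

weights = fit_weights(RATINGS, PERFORMANCE)
print("intercept, technical, motivation, other:", [round(w, 3) for w in weights])

# Two hypothetical candidates: a plain average favours B, the fitted weights favour A.
candidate_a, candidate_b = (8, 7, 3), (5, 5, 9)
print("Plain average:", sum(candidate_a) / 3, "vs", round(sum(candidate_b) / 3, 2))
print("Weighted:     ", round(predict(weights, candidate_a), 2),
      "vs", round(predict(weights, candidate_b), 2))
```

In this toy data the third rating has no bearing on performance, so the regression assigns it a weight of approximately zero, and the ranking of the two candidates reverses once that rating stops influencing the total.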

Examples of this approach to aggregation range in complexity, and the most commonly used ‘linear regression models’ have been labelled ‘the workhorse of judgement and decision-making research’ (Hogarth & Karelaia, 2007). In the Yu and Kuncel (2020) study, the mechanically aggregated predictions correlated .32 with performance, reflecting a superior, albeit still limited, approach to prediction.

This approach is a key feature of PCL’s Profile:Match2 (PM2) personality psychometric. PM2 translates personality into competencies by (1) identifying which elements of personality are relevant, (2) accounting for how these elements of personality relate to the competency, and (3) applying the optimal weighting to each personality element’s contribution to the competency score. This approach applies some of the principles of ‘decision hygiene’ as it utilises mechanical aggregation to maximise the predictive power of the personality data.

So far, we have discussed how decisions can be improved through the use of multiple information sources that are aggregated in a way that reduces noise as much as possible. This leads to another of Kahneman et al.’s (2021) points, which concerns the benefits of incorporating multiple participants in the decision-making process.

The Wisdom of Crowds

Drawing from multiple perspectives is an example of decision hygiene, as it is a potentially powerful strategy for improving judgements. The authors illustrate this by citing an observation made by Francis Galton in the early 20th century concerning a competition to guess the weight of an ox at a county fair. The guesses varied widely, yet when collated, the crowd’s average guess was closer to the actual weight than the vast majority of individual estimates.

Involving multiple participants in the decision-making process brings some powerful benefits, but Kahneman et al. (2021) share a word of caution: the wisdom of the crowd doesn’t guard against bias. If a group shares a bias that manifests as a systematic error in judgement, the mean of the members’ responses will not cancel that bias; it will only distil it and make it stand out more clearly. For example, if an executive board is heavily risk averse, this aversion will appear more significant when the group’s judgements about an investment opportunity are aggregated.
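Both the benefit and the caveat can be seen in a small worked example. The figures below are invented (they are not Galton’s data): one crowd’s guesses scatter on both sides of the true weight, while a second crowd makes the same guesses shifted upwards by a shared, hypothetical over-estimate.

```python
from statistics import mean

TRUE_WEIGHT = 1200   # hypothetical true weight of the ox, in pounds
SHARED_BIAS = 150    # hypothetical amount by which the second crowd over-estimates

INDEPENDENT_GUESSES = [1050, 1320, 1180, 1290, 1100, 1272]
BIASED_GUESSES = [guess + SHARED_BIAS for guess in INDEPENDENT_GUESSES]


def crowd_error(guesses, truth):
    """How far the crowd's average guess lands from the truth."""
    return mean(guesses) - truth


def individuals_beaten(guesses, truth):
    """Number of individuals whose guess is further off than the crowd average."""
    crowd_miss = abs(crowd_error(guesses, truth))
    return sum(1 for guess in guesses if abs(guess - truth) > crowd_miss)


for label, guesses in [("Independent crowd", INDEPENDENT_GUESSES),
                       ("Crowd with shared bias", BIASED_GUESSES)]:
    print(f"{label}: average misses by {crowd_error(guesses, TRUE_WEIGHT)} lb, "
          f"beating {individuals_beaten(guesses, TRUE_WEIGHT)} of {len(guesses)} members")
```

Averaging cancels errors that point in different directions, which is why the first crowd’s mean is so accurate. An error that every member shares survives the averaging intact, so the second crowd’s mean is off by almost exactly the shared bias.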

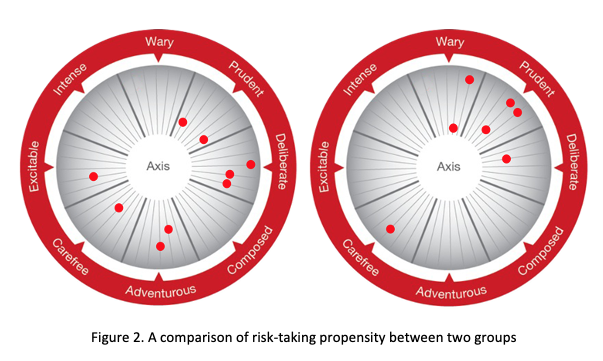

In order to practise decision hygiene, it is important to (1) assess the potential biases of individuals, and (2) determine whether, when those individuals are placed into teams, these biases are amplified at group level. This is an area in which PCL’s Risk Type Compass (RTC) psychometric can help. The RTC assesses an individual’s risk-relevant personality characteristics and provides an in-depth report that includes an illustration indicating where the user is located in a risk-taking framework. This information can also be used to compare the risk-taking propensity of a group, offering a unique insight into potential group bias (see Figure 2).

In the comparison illustrated above, the team on the left contains a more temperamentally diverse group of people and the team on the right reflects greater homogeneity. Both bring risks that need to be carefully managed, but management cannot occur without identifying the biases each group brings.

The team on the left will be less likely to fall prey to the groupthink that Kahneman et al. (2021) warn of, but intra-group conflict is more likely if disagreements are not recognised as manifestations of this diversity and welcomed as potentially valuable contributions.

The team on the right will likely experience greater group harmony due to shared member bias, yet this could lead to crucial blind spots about opportunities and threats that may prove detrimental at a group, or even organisational level.

Summary

Significant disruption to the world of work has thrown up many challenges. In the realm of selection, increasing the accuracy of selection judgements will add costs to the process. Yet these costs pale into insignificance when compared with the costs of poor decisions.

Effective decision making is more important than ever, and decision hygiene is an important discipline that should be incorporated into future strategy. Leveraging the insight afforded by psychometrics is an excellent way to practise decision hygiene, identify sources of noise and bias, and limit their impact on decision making.

What’s All This Noise About?


References

Arnold, J., Coyne, I., Randall, R., & Patterson, F. (2020). Work psychology: Understanding human behaviour in the workplace (7th ed.). London: Pearson.

Hogarth, R. M., & Karelaia, N. (2007). Heuristic and Linear Models of Judgment: Matching Rules and Environments. Psychological Review, 114(3), 733-758.

Huffcutt, A. I., Culbertson, S. S., & Weyhrauch, W. S. (2013). Employment interview reliability: New meta-analytic estimates by structure and format. International Journal of Selection and Assessment, 21(3), 264-276.

Kahneman, D., Sibony, O., & Sunstein, C. R. (2021). Noise: A flaw in human judgement. London: Harper Collins.

Kahneman, D., Rosenfield, A. M., Gandhi, L., & Blaser, T. (October, 2016). Noise: How to overcome the high, hidden cost of inconsistent decision making. Retrieved July 22, 2021, from https://hbr.org/2016/10/noise

Yu, M. C., & Kuncel, N. R. (2020). Pushing the Limits for Judgmental Consistency: Comparing Random Weighting Schemes with Expert Judgments. Personnel Assessment and Decisions, 6(2), 1-10.